Cybersecurity Snapshot: Top Advice for Detecting and Preventing AI Attacks, and for Securing AI Systems

As organizations eagerly adopt AI, cybersecurity teams are racing to protect these new systems. In this special edition of the Cybersecurity Snapshot, we round up some of the best recent guidance on how to fend off AI attacks, and on how to safeguard your AI systems.

Key takeaways

- Developers are getting new playbooks from groups like OWASP and OpenSSF to lock down agentic AI and AI coding assistants.

- Hackers are broadly weaponizing AI, using conventional LLMs to scale up phishing and new agentic AI tools to run complex, automated attacks.

- To fight fire with fire, organizations are unleashing their own agentic AI tools to hunt for threats and boost their cyber defenses.

In case you missed it, here’s fresh guidance for protecting your organization against AI-boosted attacks, and for securing your AI systems and tools.

1 - OWASP: How to safeguard agentic AI apps

Agentic AI apps are all the rage because they can act autonomously. That’s also why they present a major security challenge. If an AI app can act on its own, how do you stop it from going rogue or getting hijacked?

If you’re building or deploying these “self-driving” AI apps, take a look at OWASP’s new “Securing Agentic Applications Guide.”

Published in August, this guide gives you “practical and actionable guidance for designing, developing, and deploying secure agentic applications powered by large language models (LLMs).”

It's a guide aimed at the folks in the trenches, including developers, AI/ML engineers, security architects, and security engineers. Topics include:

- Technical security controls and best practices

- Secure architectural patterns

- Common threat mitigation strategies

- Guidance across the development lifecycle (design, build, deploy, operate)

- Security considerations for components such as LLMs, orchestration middleware, memory, tools, and operational environments

It even provides examples of how to apply security principles in different agentic architectures.
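To give a flavor of what a technical control around agent tools can look like, here is a minimal sketch of a tool-call guard. It is not taken from the OWASP guide. The `TOOL_POLICY` table, the tool names, and the `guarded_call` and `approve` names are all hypothetical, chosen only for illustration.

```python
from typing import Any, Callable, Dict

# Hypothetical policy table (an assumption for this sketch):
# tool name -> True if a human must approve every call to it.
TOOL_POLICY: Dict[str, bool] = {
    "search_docs": False,  # low risk, runs without approval
    "send_email": True,    # high risk, needs a human in the loop
}


def guarded_call(
    tool_name: str,
    tool_fn: Callable[..., Any],
    args: Dict[str, Any],
    approve: Callable[[str, Dict[str, Any]], bool],
) -> Any:
    """Run a tool on the agent's behalf only if the policy allows it."""
    # Deny by default: tools missing from the policy table never run.
    if tool_name not in TOOL_POLICY:
        raise PermissionError(f"tool {tool_name!r} is not on the allowlist")
    # High-risk tools require an explicit approval decision.
    if TOOL_POLICY[tool_name] and not approve(tool_name, args):
        raise PermissionError(f"call to {tool_name!r} was not approved")
    return tool_fn(**args)
```

The design choice here is deny-by-default: the agent can only reach tools that someone deliberately listed, and the riskiest ones still wait for a person to say yes.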

For more information about agentic AI security:

- “Frequently Asked Questions About Model Context Protocol (MCP) and Integrating with AI for Agentic Applications” (Tenable)

- “Cybersecurity in 2025: Agentic AI to change enterprise security and business operations in year ahead” (SC World)

- “How AI agents could revolutionize the SOC — with human help” (Cybersecurity Dive)

- “Three Essentials for Agentic AI Security” (MIT Sloan Management Review)

- “Beyond ChatGPT: The rise of agentic AI and its implications for security” (CSO)

2 - Anthropic: How an attacker turned Claude Code into a master hacker

Think that OWASP guide is just theoretical? Think again. In a stark example of agentic AI's potential for misuse, AI vendor Anthropic recently revealed how a sophisticated cyber crook weaponized its Claude Code product to “an unprecedented degree” in a broad extortion and data-theft campaign.

It’s a remarkable story, even by the standards of the AI world. The hacker used this agentic AI coding tool to:

- Automate reconnaissance.

- Harvest victims’ credentials.

- Breach networks.

- Make tactical and strategic decisions, such as choosing which data to steal, and crafting “psychologically targeted” extortion demands.

- Crunch stolen financial data to set the perfect ransom amounts.

- Generate “visually alarming” ransom notes.

The incident takes AI-assisted cyber crime to another level.

“Agentic AI has been weaponized. AI models are now being used to perform sophisticated cyberattacks, not just advise on how to carry them out,” Anthropic wrote in an August blog post.

This new breed of agentic AI abuse makes defense much harder, because an autonomous tool can adapt to defenses in real time.

By the time Anthropic shut the attacker down, at least 17 organizations had been hit, including healthcare, emergency services, government, and religious groups.

Anthropic says it has since built new classifiers – automated screening tools – and detection methods to catch these attacks faster.

This incident, which Anthropic labeled “vibe hacking,” is just one of 10 real-world use cases included in Anthropic’s “Threat Intelligence Report: August 2025” that detail abuses of the company’s AI tools.

Anthropic said it hopes the report helps the broader AI security community strengthen their own defenses.

“While specific to Claude, the case studies … likely reflect consistent patterns of behaviour across all frontier AI models. Collectively, they show how threat actors are adapting their operations to exploit today’s most advanced AI capabilities,” the report reads.

For more information about AI security, check out these Tenable Research blogs:

- “Frequently Asked Questions About DeepSeek Large Language Model (LLM)”

- “Frequently Asked Questions About Model Context Protocol (MCP) and Integrating with AI for Agentic Applications”

- “Frequently Asked Questions About Vibe Coding”

- “AI Security: Web Flaws Resurface in Rush to Use MCP Servers”

- “CVE-2025-54135, CVE-2025-54136: Frequently Asked Questions About Vulnerabilities in Cursor IDE (CurXecute and MCPoison)”

3 - CSA: Traditional IAM can’t handle agentic AI identity threats

The Anthropic attack, in which an agentic AI tool stole credentials, highlights a fundamental vulnerability: managing identities for autonomous systems. What happens when you give these autonomous AI systems the keys to your organization’s digital identities?

It’s a question that led the Cloud Security Alliance (CSA) to develop a proposal for how to better protect digital identities in agentic AI tools.

In its new paper "Agentic AI Identity and Access Management: A New Approach," published in August, the CSA argues that traditional approaches for identity and access management (IAM) fall short when applied to agentic AI systems.

“Unlike conventional IAM protocols designed for predictable human users and static applications, agentic AI systems operate autonomously, make dynamic decisions, and require fine-grained access controls that adapt in real-time,” the CSA paper reads.

Its solution? A new, adaptive IAM framework that ditches old-school, predefined roles and permissions for a continuous, context-aware approach.

The framework is built on several core principles:

- Zero trust architecture

- Decentralized identity management

- Dynamic policy-based access control

- Continuous monitoring

The CSA’s proposed framework is built on “rich, verifiable” identities that track an AI agent’s capabilities, origins, behavior, and security posture.

Key components of the framework include an agent naming service (ANS) and a unified global session-management and policy-enforcement layer.
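To illustrate the difference between a static role check and a continuous, context-aware decision, here is a minimal sketch. It is not the CSA's design. The `AgentContext` fields, the `is_allowed` function, and both thresholds are assumptions made up for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds, chosen only for illustration.
MAX_CREDENTIAL_AGE = timedelta(minutes=15)
MAX_ANOMALY_SCORE = 0.5


@dataclass(frozen=True)
class AgentContext:
    """What is known about an agent at the moment it makes a request."""
    agent_id: str
    capabilities: frozenset[str]     # actions the agent is attested to perform
    credential_issued_at: datetime   # when its short-lived credential was issued
    anomaly_score: float             # 0.0 (normal) to 1.0, from monitoring


def is_allowed(ctx: AgentContext, action: str, now: datetime) -> bool:
    """Evaluate every request against current context, not a fixed role."""
    if action not in ctx.capabilities:
        return False  # the action is outside the agent's declared capabilities
    if now - ctx.credential_issued_at > MAX_CREDENTIAL_AGE:
        return False  # stale credential: the agent must re-authenticate
    if ctx.anomaly_score > MAX_ANOMALY_SCORE:
        return False  # monitoring flagged unusual behavior
    return True
```

Because the check runs on each request, the same agent can be allowed one minute and denied the next if its credential ages out or its behavior starts to look anomalous.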

For more information about IAM in AI systems:

- “A New Identity: Agentic AI boom risks busting IAM norms” (SC World)

- “Exposure Management in the realm of AI” (Tenable)

- “A Novel Zero-Trust Identity Framework for Agentic AI: Decentralized Authentication and Fine-Grained Access Control” (researchers from several universities and companies, including MIT and AWS, via arXiv.org)

- “Tenable Cloud AI Risk Report 2025” (Tenable)

- “Enterprises must rethink IAM as AI agents outnumber humans 10 to 1” (VentureBeat)

4 - OpenAI: Attackers abuse ChatGPT to sharpen old tricks

While agentic AI attacks illustrate novel AI-abuse methods, attackers are also misusing conventional AI chatbots for more pedestrian purposes.

For example, as OpenAI recently disclosed, attackers have attempted to use ChatGPT to refine malware, set up command-and-control hubs, write multi-language phishing emails, and run cyber scams.

In other words, these attackers weren’t trying to use ChatGPT to create sci-fi-level super-attacks, but mostly trying to amplify their classic scams, according to OpenAI’s report “Disrupting malicious uses of AI: an update.”

“We continue to see threat actors bolt AI onto old playbooks to move faster, not gain novel offensive capability from our models,” OpenAI wrote in the report, published in October.

The report identifies several key trends among threat actors:

- Using multiple AI models

- Adapting their techniques to hide AI usage

- Operating in a "gray zone" with requests that are not overtly malicious

Incidents detailed in the report include the malicious use of ChatGPT by:

- Cyber criminals from Russian-speaking, Korean-language, and Chinese-language groups to refine malware, create phishing content, and debug tools

- Authoritarian regimes, specifically individuals linked to the People's Republic of China (PRC), to design proposals for large-scale social media monitoring and profiling, including a system to track Uyghurs

- Organized scam networks, likely based in Cambodia, Myanmar, and Nigeria, to scale fraud by translating messages and creating fake personas

- State-backed influence operations from Russia and China to generate propaganda, including video scripts and social media posts

“Our public reporting, policy enforcement, and collaboration with peers aim to raise awareness of abuse while improving protections for everyday users,” OpenAI wrote in the statement “Disrupting malicious uses of AI: October 2025.”

For more information about AI security, check out these Tenable resources:

- “2025 Cloud AI Risk Report: Helping You Build More Secure AI Models in the Cloud” (on-demand webinar)

- “Cloud & AI Security at the Breaking Point — Understanding the Complexity Challenge” (solution overview)

- “Exposure Management in the realm of AI” (on-demand webinar)

- “Expert Advice for Boosting AI Security” (blog)

- “AI Is Your New Attack Surface” (on-demand webinar)

5 - Is your AI coding buddy a security risk?

Hackers aren't the only ones using AI to code. Your own developers are, too. But the productivity gain they get from AI coding assistants can be costly if they’re not careful.

To help developers with this issue, the Open Source Security Foundation (OpenSSF) published the “Security-Focused Guide for AI Code Assistant Instructions.”

“AI code assistants are powerful tools,” reads the OpenSSF blog “New OpenSSF Guidance on AI Code Assistant Instructions.” “But they also create security risks, because the results you get depend heavily on what you ask.”

The guide, published in September, gives developers tips and best practices on how to prompt these AI helpers to reduce the risk that they’ll generate unsafe code.

Specifically, the guide aims to ensure that AI coding assistants consider:

- Application code security, such as validating inputs and managing secrets

- Supply chain safety, such as selecting safe dependencies and using package managers

- Platform or language-specific issues, such as applying security best practices to containers

- Security standards and frameworks, such as those from OWASP and the SANS Institute

“In practice, this means fewer vulnerabilities making it into your codebase,” reads the guide.
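As an illustration of the first item in the list above, here is a minimal sketch of the kind of code a security-minded prompt is meant to steer an assistant toward: strict input validation and secrets read from the environment instead of hardcoded. It is not taken from the OpenSSF guide. The function names, the username rule, and the `EXAMPLE_API_KEY` variable are hypothetical.

```python
import os
import re

# Hypothetical rule for this sketch: 3 to 32 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(raw: str) -> str:
    """Accept only input that matches the allowlist pattern in full."""
    if not isinstance(raw, str) or USERNAME_PATTERN.fullmatch(raw) is None:
        raise ValueError("invalid username")
    return raw


def load_api_key(env_var: str = "EXAMPLE_API_KEY") -> str:
    """Read a secret from the environment so it never lives in source code."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key
```

Validating against an allowlist pattern rejects anything unexpected by default, and failing loudly when the secret is missing avoids silently falling back to a hardcoded value.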

For more information about the cyber risks of AI coding assistants:

- “Hackers setting traps for vibe coders: AI assistants can deliver malware” (Cybernews)

- “Cybersecurity Risks of AI-Generated Code” (Georgetown University)

- “Security risks of AI-generated code and how to manage them” (TechTarget)

- “AI-generated code risks: What CISOs need to know” (ITPro)

- “GitLab's AI Assistant Opened Devs to Code Theft” (Dark Reading)

6 - PwC: Cyber teams can’t get enough AI

Finally, here’s how organizations are fighting back. They’re leaning heavily into AI to strengthen their cyber defenses, including by prioritizing the use of defensive agentic AI tools.

That’s according to PwC’s new “2026 Global Digital Trust Insights: C-suite playbook and findings” report, based on a global survey of almost 4,000 business and technology executives.

“AI’s potential for transforming cyber capabilities is clear and far-reaching,” reads a PwC article with highlights from the report, published in October.

For example, organizations are prioritizing the use of AI to enhance how they allocate cyber budgets; use managed cybersecurity services; and address cyber skills gaps.

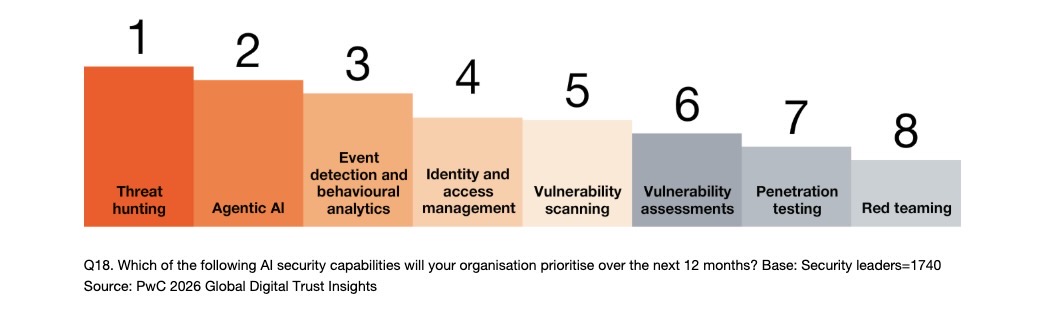

With regard to respondents' priorities for AI cybersecurity capabilities in the coming year, threat hunting ranked first, followed by agentic AI. Other areas include identity and access management, and vulnerability scanning / vulnerability assessments.

AI security capabilities organizations will prioritize over the next 12 months

Meanwhile, organizations plan to use agentic AI primarily to bolster cloud security, data protection, and security operations in the coming year. Other agentic AI priority areas include security testing; governance, risk and compliance; and identity and access management.

“Businesses are recognising that AI agents — autonomous, goal-directed systems capable of executing tasks with limited human intervention — have enormous potential to transform their cyber programmes,” reads the report.

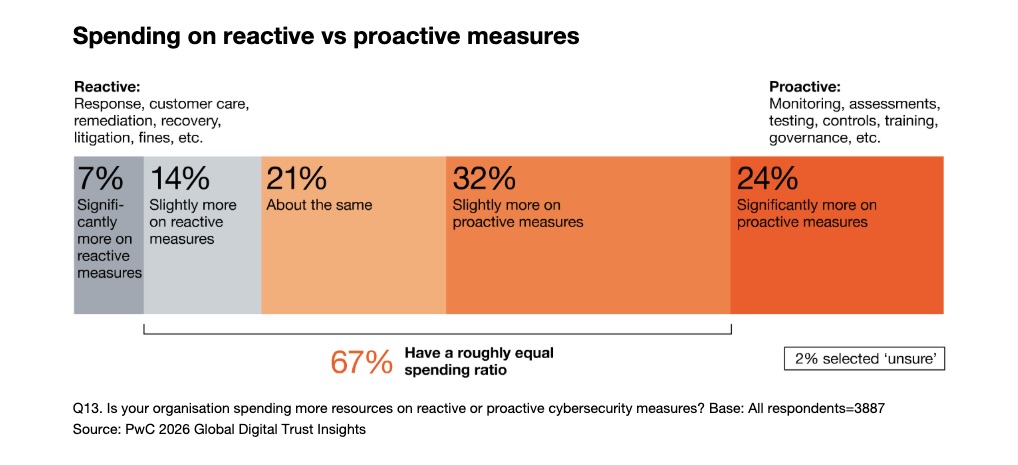

Beyond AI, the report also urges cyber teams to prioritize prevention over reaction. Proactive work like monitoring, assessments, testing, and training is always cheaper than the crisis-mode alternative of incident response, remediation, litigation, and fines. Yet, only 24% of organizations said they spend “significantly more” on proactive measures.

Other topics covered in the report include geopolitical risk; cyber resilience; the quantum computing threat; and the cyber skills gap.

For more information about AI data security, check out these Tenable resources:

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources” (blog)

- “Tenable Cloud AI Risk Report 2025” (report)

- “New Tenable Report: How Complexity and Weak AI Security Put Cloud Environments at Risk” (blog)

- “Securing the AI Attack Surface: Separating the Unknown from the Well Understood” (blog)

- “The AI Security Dilemma: Navigating the High-Stakes World of Cloud AI” (blog)

Check back here next Friday, when we’ll share some of the top AI risk-management and governance best practices from recent months.
